AI search has become one of those areas that everyone is talking about, but the mechanics behind it are still a bit of a mystery. Tools like ChatGPT, Gemini and Copilot are now part of the fabric of search, but the way these systems select, interpret and summarise information isn’t explained in the same way traditional search ranking has been over the years. They’re influencing discovery, yet with far less clarity around how the answers are formed. Welcome to the unknown.

Right now, I don't think anyone has the full rulebook. There’s no industry consensus on what “AI visibility” or “AEO, GEO, AIO” (pick your acronym of choice) even means in practice, and theories are changing daily. So, while the ground moves underneath us, we're staying curious, testing and having a bit of fun while we do it.

With that in mind, we've set up a couple of practical experiments to help us understand what AI pays attention to and how websites might evolve to be AI-ready for the years ahead.

One of the clearest messages from Google over the past year has been around transparency. Google hasn’t objected to AI-assisted content - they've objected to content that isn’t helpful, isn’t clearly written for people, or doesn’t explain its purpose. Their guidance emphasises:

In short: transparency builds trust, and trust supports visibility.

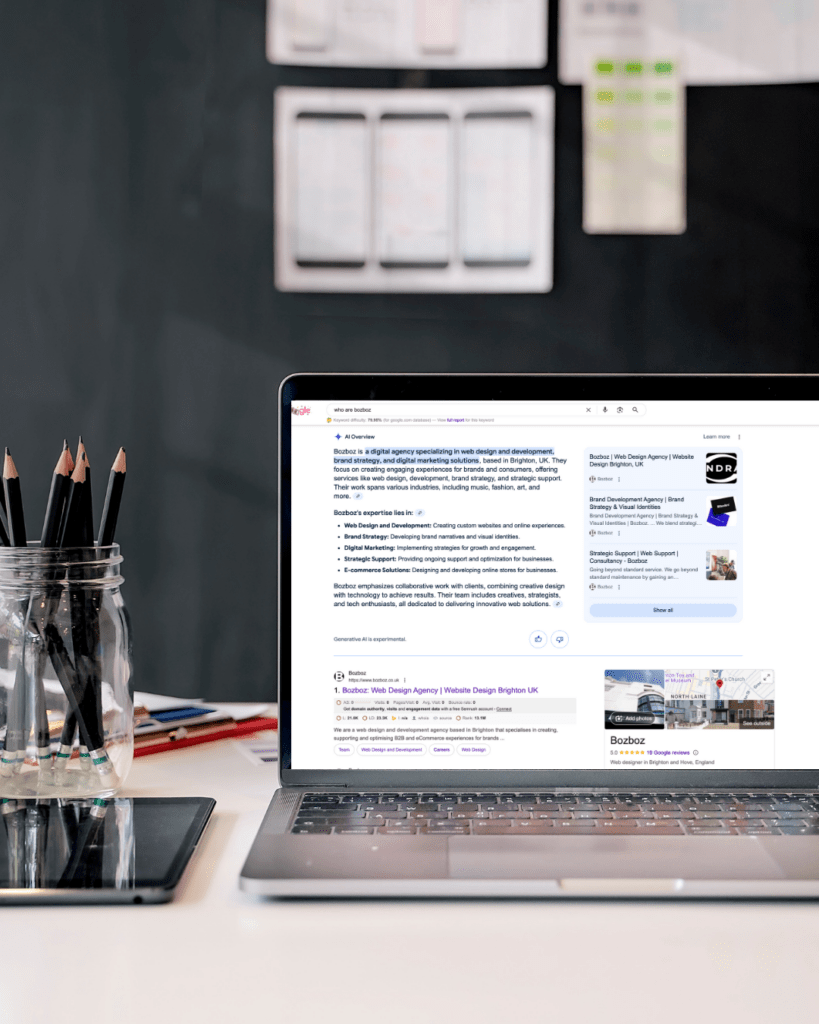

So on a selection of recent Bozboz articles, we’ve introduced a small AI disclosure box. It follows you down the left-hand side of the page and gives a brief explanation of how AI supported the piece and how the content was reviewed. We're trying to set expectations early on and tell users / search engines "hey, we've used AI, but for good reason - here's why".

For readers, transparency often creates confidence. For search engines, it aligns with what Google has been hinting at through its Helpful Content and E-E-A-T frameworks. And for us, it sets a standard we can easily maintain and include in our "playbook" for content creation.

We’re not expecting dramatic changes overnight, but we are looking to understand:

It’s early days, but we're committed to tweaking and measuring. We've set up some tracking with one of our marketing tools, SEMrush, and we'll see how it plays out over the next few months.

The second experiment is more technical but just as exploratory.

There’s a growing murmur in parts of the AI community about a cheeky little file called llms.txt. The idea is simple enough - it gives AI crawlers a clear, structured description of your business. It sits quietly alongside robots.txt and sitemap.xml, but instead of telling search engines what to crawl, it tells AI models what your organisation does, who you help and other core information you feel is important for AI to know about you.

For context: websites often use small “behind the scenes” text files to help different systems understand what’s on the site. For example, robots.txt tells search engines which parts of a website they should or shouldn’t crawl, and sitemap.xml gives them a structured list of key pages. These files don’t change how a site looks - they simply guide machines. llms.txt follows the same idea, but it’s designed for AI tools rather than traditional search engines.
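To make the robots.txt part concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt content, the `example.com` URLs and the `ExampleBot` crawler name are all made up for illustration; they are not taken from our own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text into a parser we can query."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A well-behaved crawler asks these questions before fetching a page.
blog_allowed = parser.can_fetch("ExampleBot", "https://example.com/blog/")
admin_allowed = parser.can_fetch("ExampleBot", "https://example.com/admin/settings")
sitemaps = parser.site_maps()  # available in Python 3.8+

print(blog_allowed, admin_allowed, sitemaps)
```

The point is simply that these files are read by machines, not people: a crawler loads the file, checks the rules and decides what to fetch.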

It’s not just us taking a look at llms.txt. A few developer platforms and AI-heavy organisations have added the file to parts of their sites to see whether it helps. It’s not mainstream yet, and there’s no official endorsement from the big AI providers, but there’s enough early tinkering out there to make it worth exploring - so we are.

On our own site, our file outlines:

It isn’t bloated, and it isn’t written to “game” anything. It’s simply a structured, human-written (AI supported) signpost - and if AI systems begin to look for this format, we’ll benefit from being early adopters and have some experience to build on. There are also more comprehensive llms.txt files you can add, but we're not there yet.
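For a sense of what such a file can look like, here is a rough sketch that assembles one in Python. It assumes the markdown-style layout from the community llms.txt proposal (a title, a short summary, then sections of links), which no major AI provider has officially endorsed. The business name, URLs and the `build_llms_txt` helper are hypothetical placeholders, not the contents of our own file.

```python
def build_llms_txt(name: str, summary: str, sections: dict) -> str:
    """Assemble llms.txt text: a title, a one-line summary, then link sections.

    `sections` maps a heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Placeholder data for an imaginary business.
llms_txt = build_llms_txt(
    name="Example Agency",
    summary="A digital agency that designs and builds websites.",
    sections={
        "Services": [
            ("Web design", "https://example.com/web-design", "What we build and for whom"),
        ],
        "About": [
            ("Contact", "https://example.com/contact", "How to get in touch"),
        ],
    },
)

print(llms_txt)
```

The resulting text would be saved as `llms.txt` at the root of the site, next to robots.txt and sitemap.xml.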

What we’re watching for is straightforward:

We're keen to see what happens with this one - and if you're interested in getting an llms.txt file on your site, we can help.

Clients and peers are asking the right questions - How do we appear in AI search? What should we be doing now? How much of this is noise?

The honest answer is that nobody knows exactly, although some are further along the journey than others. But there are themes you can rely on: clarity, transparency, useful content, strong information architecture. These are all safe bets regardless of where AI search ends up.

Our experiments will help us understand and validate ideas instead of relying on pure hearsay or guesswork. Plus, we don’t want to recommend tactics we haven’t tried ourselves, and we don’t believe in over-claiming on ideas that aren’t proven. By testing these things early, we can give clearer, calmer guidance later - and avoid the hype cycles that often surround new technology.

We’ll keep tracking what happens as these experiments run in the background. Over time, we’ll be looking at:

And when there’s something meaningful to share, we’ll publish an update.

For now, this is simply the start - a couple of steps towards understanding how AI might shape search behaviour. If you’re curious about running similar experiments on your own site, or you’d like help adapting your content for this rollercoaster of digital emotion, we’re always happy to talk through the detail.
